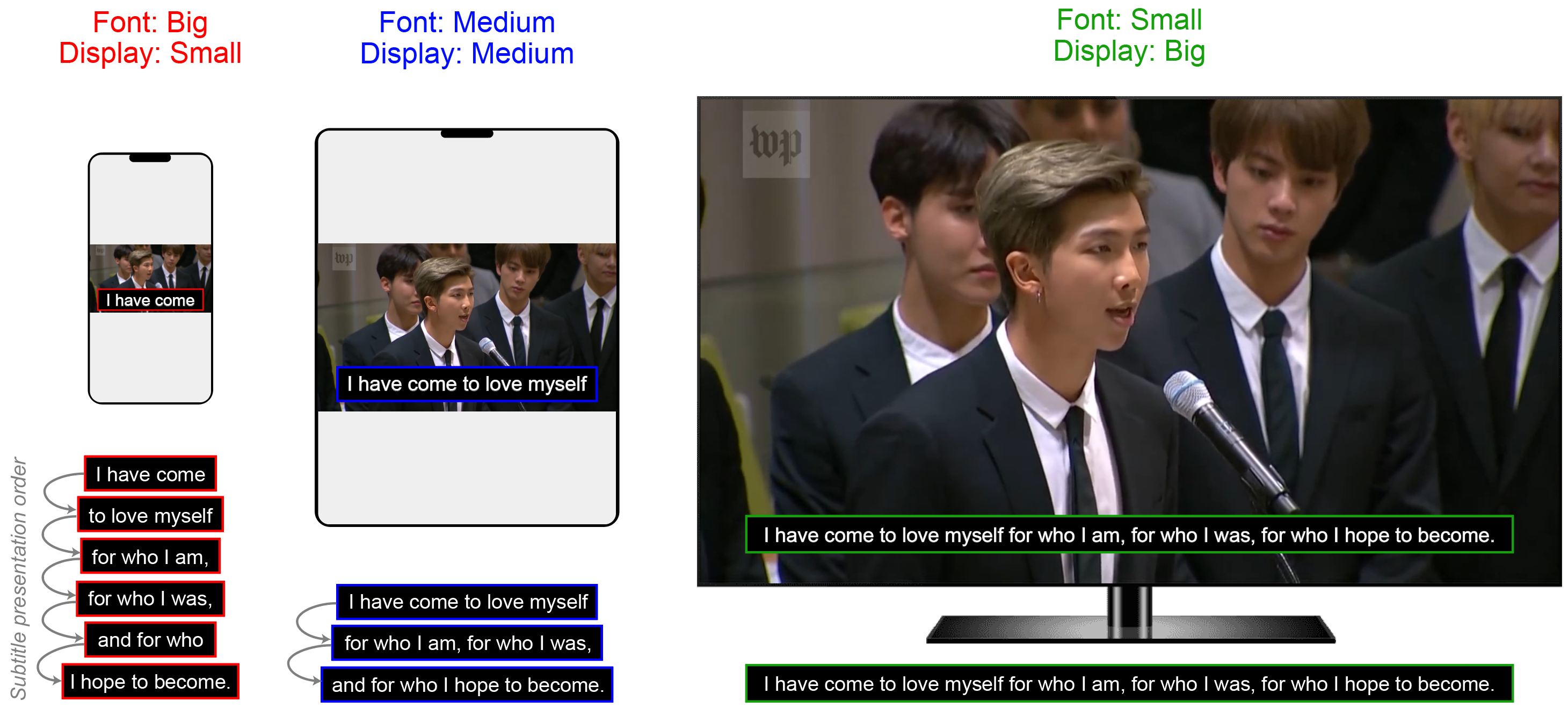

Given any font size of subtitles specified by a user, which may vary depending on the display size (due to PPI) and individual preferences, our method automatically generates an optimized presentation of subtitles. The method reconstructs the word composition displayed on the screen at once according to the given font size. To achieve optimal reconstruction that is synchronized with the video content, the duration of speech pause between adjacent words is utilized. Image source: https://www.youtube.com/watch?v=ZhJ-LAQ6e_Y